Status: Concluded, Funded by: Samsung Eletrônica da Amazônia Ltda.

Advisor: Prof. Anderson Rocha

Host: University of Campinas

About

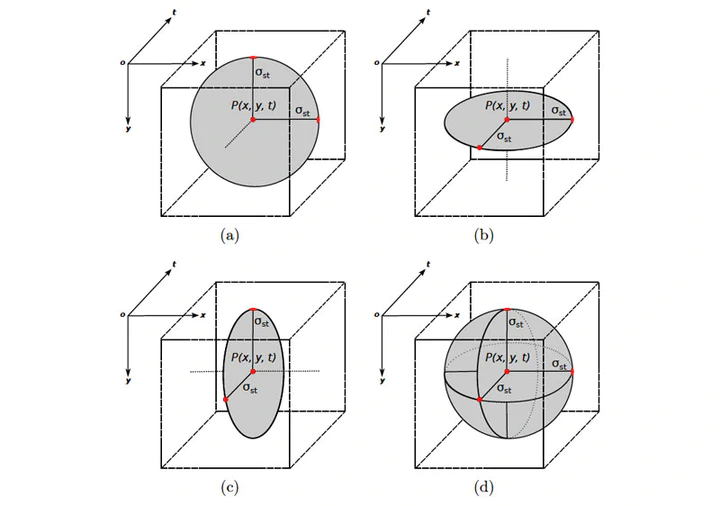

Temporal Robust Features (TRoF) comprises a spatiotemporal video content detector and descriptor, designed for a low memory footprint and short runtime. It has been shown to be effective for pornography and violence detection. Please refer to both articles for further technical details.

Usage

The TRoF executable is distributed as a Docker image, available here. Before running it, install Docker (available for various OS platforms). Once Docker is running, execute the following commands on the command line:

your-computer$ docker run -ti dmoreira/trof bash

docker-instance# cd TRoF

docker-instance# ./fast_trof_descriptor

Follow the printed usage instructions for running TRoF. The software reads a video input file and outputs float feature vectors, one per line, in the following format:

x y t v1 v2 v3 ... vn
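
The output format above can be loaded with a few lines of Python. This is a minimal sketch rather than part of the TRoF distribution: it assumes the fields are separated by whitespace, that the first three fields (x, y, t) are numeric, and that every remaining field is a descriptor value. The names `TrofFeature`, `parse_trof_line`, and `parse_trof_output` are hypothetical and chosen only for illustration.

```python
from typing import List, NamedTuple


class TrofFeature(NamedTuple):
    """One output line: the x, y, t fields followed by the values v1..vn."""
    x: float
    y: float
    t: float
    values: List[float]


def parse_trof_line(line: str) -> TrofFeature:
    """Parse a single 'x y t v1 v2 ... vn' line (whitespace-separated, assumed)."""
    fields = line.split()
    if len(fields) < 4:
        raise ValueError(f"expected at least 4 fields, got {len(fields)}")
    numbers = [float(field) for field in fields]
    return TrofFeature(numbers[0], numbers[1], numbers[2], numbers[3:])


def parse_trof_output(text: str) -> List[TrofFeature]:
    """Parse a whole output dump, skipping blank lines."""
    return [parse_trof_line(line) for line in text.splitlines() if line.strip()]
```

For example, `parse_trof_output(open("features.txt").read())` would return one `TrofFeature` per non-empty line, where `features.txt` is a placeholder for wherever the program output was saved.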

Please refer to either here or here if you want to add videos to a running TRoF Docker instance.

Citation

If you are using TRoF, please cite:

@article{moreira2016fsi,

title = {Pornography classification: the hidden clues in video space-time},

author = {Daniel Moreira and Sandra Avila and Mauricio Perez and Daniel Moraes and

Vanessa Testoni and Eduardo Valle and Siome Goldenstein and Anderson Rocha},

journal = {Elsevier Forensic Science International},

year = {2016},

volume = {268},

number = {1},

pages = {46--61}

}

Disclaimer

This software is provided by the authors as is, with no warranties and no support, for academic purposes only. The authors assume no responsibility or liability for the use of the software. They do not convey any license or title under any patent or copyright, and they reserve the right to make changes in the software without notification.

Acknowledgments

This software was developed through the project “Sensitive Media Analysis”, hosted at the University of Campinas and sponsored by Samsung Eletrônica da Amazônia Ltda., in the framework of the Brazilian law N. 8,248/91. We are grateful for the financial support of the Brazilian Council for Scientific and Technological Development - CNPq (Grants #477662/2013-7, #304472/2015-8), the São Paulo Research Foundation - FAPESP (DejaVu Grant #2015/19222-9), and the Coordination for the Improvement of Higher Level Education Personnel - CAPES (DeepEyes project).