Abstract

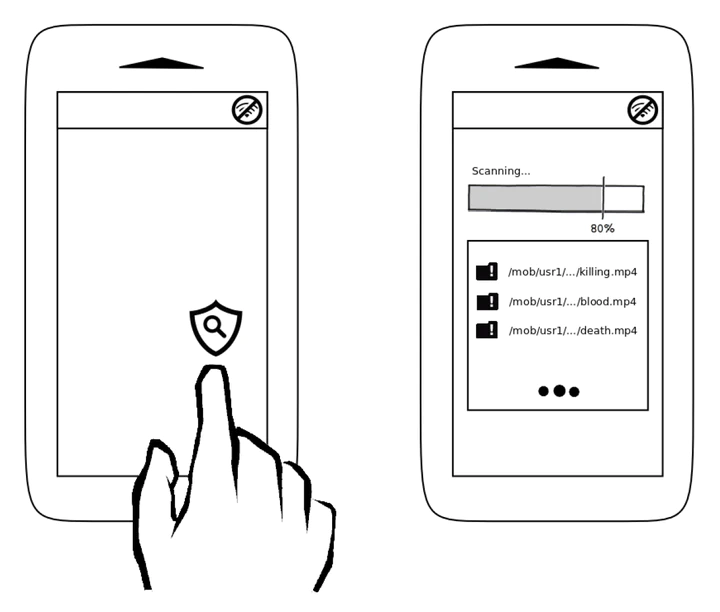

Sensitive video can be defined as any motion picture that may pose threats to its audience. Typical examples include, but are not limited to, pornography, violence, child abuse, and cruelty to animals. With the ever more pervasive role of digital data in our lives, sensitive-content analysis is a major concern for law enforcers, companies, tutors, and parents, due to the potential harm of such content to minors, students, workers, and others. However, employing human mediators to constantly analyze huge troves of sensitive data often leads to stress and trauma, which justifies the search for computer-aided analysis. In this work, we tackle this problem in two ways. In the first, we aim at deciding whether or not a video stream presents sensitive content, which we refer to as sensitive-video classification. In the second, we aim at finding, at frame level, the exact moments at which a stream starts and stops displaying sensitive content, which we refer to as sensitive-content localization. In both cases, we aim at designing and developing effective and efficient methods with a low memory footprint, suitable for deployment on mobile devices. In this vein, we provide four major contributions. The first is a novel Bag-of-Visual-Words-based pipeline for efficient time-aware sensitive-video classification. The second is a novel high-level multimodal fusion pipeline for sensitive-content localization. The third is a novel spatio-temporal video interest point detector and video content descriptor. Finally, the fourth contribution is a frame-level annotated 140-hour pornographic video dataset, which is the first in the literature that is appropriate for pornography localization.
An important aspect of the first three contributions is their generality: they can be employed, without modification of any step, to detect diverse types of sensitive content, such as those mentioned above. For validation, we choose pornography and violence, two of the most common types of inappropriate material, as representatives of sensitive content. We perform classification and localization experiments and report results for both types of content. The proposed solutions achieve an accuracy of 93% in pornography classification and correctly localize 91% of the pornographic content within a video stream. The results for violence are also compelling: with the proposed approaches, we reached second place in an international violent-scene detection competition. Putting both in perspective, we learned that pornography detection is easier than violence detection, which opens several opportunities for further investigation by the research community.